SIGGRAPH 2024 (Conference Track)

LayGA: Layered Gaussian Avatars for Animatable Clothing Transfer

Siyou Lin¹, Zhe Li¹, Zhaoqi Su¹, Zerong Zheng², Hongwen Zhang³, Yebin Liu¹

¹Tsinghua University

²NNKosmos Technology

³Beijing Normal University


Abstract

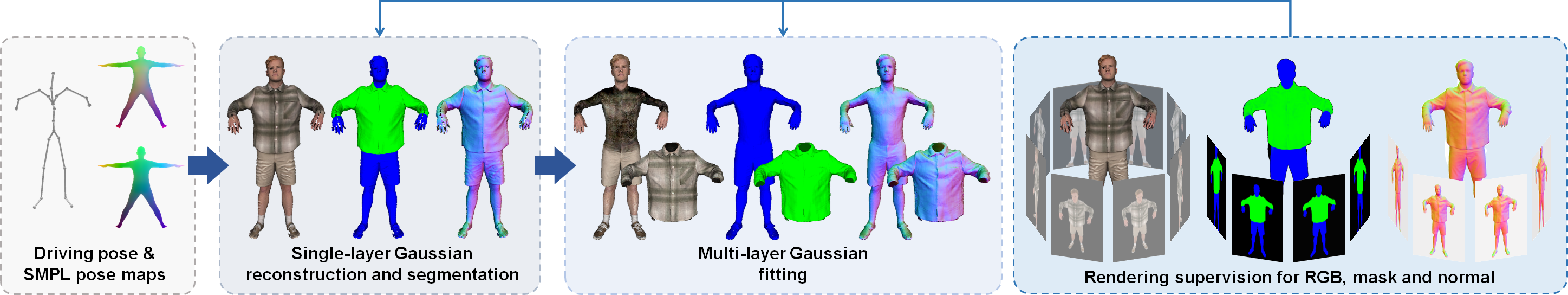

Animatable clothing transfer, which aims to dress and animate garments across characters, is a challenging problem. Most human avatar methods entangle the representations of the body and clothing, which makes virtual try-on across identities difficult. Moreover, entangled representations usually fail to accurately track the sliding motion of garments. To overcome these limitations, we present Layered Gaussian Avatars (LayGA), a new representation that models body and clothing as two separate layers for photorealistic animatable clothing transfer from multiview videos. Our representation builds on the Gaussian map-based avatar because of its strong ability to represent garment details. However, the Gaussian map produces unstructured 3D Gaussians distributed around the actual surface. The absence of a smooth, explicit surface makes accurate garment tracking and body-garment collision handling challenging. We therefore propose a two-stage training scheme consisting of single-layer reconstruction and multi-layer fitting. In the single-layer reconstruction stage, we introduce a series of geometric constraints that reconstruct smooth surfaces while simultaneously segmenting the body from the clothing. In the multi-layer fitting stage, we train two separate models for body and clothing and use the reconstructed clothing geometries as 3D supervision for more accurate garment tracking. Furthermore, we propose geometry and rendering layers for both high-quality geometric reconstruction and high-fidelity rendering. Overall, LayGA achieves photorealistic animation and virtual try-on, and outperforms the baseline methods.
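The abstract states that the reconstructed clothing geometry serves as 3D supervision for garment tracking, but this page does not give the exact loss. As an illustration only, a symmetric Chamfer distance is one common way to pull a set of predicted points toward a reference geometry. The sketch assumes both the garment layer and the reconstructed clothing are available as small point sets; `chamfer_distance` is a hypothetical helper, not part of the LayGA code.

```python
import numpy as np


# Hypothetical helper; a generic Chamfer loss, not taken from the LayGA codebase.
def chamfer_distance(pred: np.ndarray, target: np.ndarray) -> float:
    """Symmetric Chamfer distance between point sets of shape (N, 3) and (M, 3).

    `pred` stands in for points on the garment layer and `target` for points
    sampled from the clothing geometry reconstructed in the single-layer stage.
    """
    # Pairwise squared distances, shape (N, M); dense, so only for small sets.
    diff = pred[:, None, :] - target[None, :, :]
    d2 = np.sum(diff * diff, axis=-1)
    # Mean squared nearest-neighbour distance, in both directions.
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


# Usage: a garment layer offset by 0.1 along z from the reconstructed surface.
garment = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
reconstructed = garment + np.array([0.0, 0.0, 0.1])
print(chamfer_distance(garment, reconstructed))
```

The dense pairwise matrix keeps the example short; a real training loop over many thousands of points would typically use a spatial index or a GPU nearest-neighbour kernel instead.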

Method

We build our model upon the state-of-the-art Gaussian-based animatable human avatar [Li et al. 2024], and extend it in two ways:

- We improve the geometric reconstruction quality of Gaussian avatars by incorporating an image-based normal loss and other geometric regularizations;

- Building on this improved Gaussian representation with smooth reconstructed geometry, we construct layered avatars and design a collision-resolution strategy that enables animatable clothing transfer.
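The two extensions above can be illustrated with a minimal sketch. This page does not specify the loss weights, where the target normal maps come from, or LayGA's actual collision-resolution strategy. The code therefore assumes per-pixel target normals are simply given, and it substitutes a generic nearest-point push-out along body normals as one simple way to remove body-garment interpenetration. `normal_loss` and `resolve_penetration` are hypothetical names.

```python
import numpy as np


# Hypothetical helpers illustrating the two ideas; not the LayGA implementation.
def normal_loss(rendered: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """L1 + (1 - cosine) loss between (H, W, 3) normal maps over a (H, W) bool mask."""
    r = rendered[mask]  # (P, 3) foreground pixels
    t = target[mask]
    # Normalize to unit length (the 1e-8 floor avoids division by zero).
    r = r / np.maximum(np.linalg.norm(r, axis=-1, keepdims=True), 1e-8)
    t = t / np.maximum(np.linalg.norm(t, axis=-1, keepdims=True), 1e-8)
    l1 = np.abs(r - t).sum(axis=-1).mean()
    cos = 1.0 - (r * t).sum(axis=-1).mean()
    return float(l1 + cos)


def resolve_penetration(garment: np.ndarray, body: np.ndarray,
                        body_normals: np.ndarray, eps: float = 1e-3) -> np.ndarray:
    """Push garment points inside (or within eps of) the body surface outward.

    garment: (N, 3) points; body: (M, 3) surface points; body_normals: (M, 3)
    unit outward normals. Each garment point's nearest body point defines a
    local tangent plane.
    """
    diff = garment[:, None, :] - body[None, :, :]
    nearest = np.argmin(np.sum(diff * diff, axis=-1), axis=1)  # (N,) indices
    n = body_normals[nearest]
    # Signed distance to the local tangent plane: > 0 outside, < 0 inside.
    signed = np.sum((garment - body[nearest]) * n, axis=-1)
    # Only points closer than eps move; they end up exactly eps outside.
    push = np.maximum(eps - signed, 0.0)
    return garment + push[:, None] * n


# Usage: one body point with an upward normal; one garment point penetrates.
body = np.array([[0.0, 0.0, 0.0]])
normals = np.array([[0.0, 0.0, 1.0]])
garment = np.array([[0.0, 0.0, -0.5], [0.0, 0.0, 0.5]])
resolved = resolve_penetration(garment, body, normals)
```

Combining an L1 term with a cosine term is a common choice for normal supervision because the cosine term penalizes direction errors independently of magnitude. The push-out only moves penetrating points, so garment regions already outside the body are left untouched.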

Our pipeline is two-stage:

- Stage 1: Single-layer reconstruction and body-clothing segmentation, which guide the layered reconstruction;

- Stage 2: Multi-layer avatar training with separate body and garment models.
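This page does not say how the Stage 1 segmentation is computed or how it initializes Stage 2. As one illustrative possibility only, the sketch below assumes each reconstructed point already has a body/clothing label from a 2D segmentation mask in every camera view where it is visible. It takes a majority vote across views and splits the points into body and clothing sets. `vote_layer_labels` and `split_layers` are hypothetical helpers, not LayGA code.

```python
import numpy as np


# Hypothetical helpers; one possible way to turn multiview labels into layers.
def vote_layer_labels(view_labels: np.ndarray, visible: np.ndarray) -> np.ndarray:
    """Majority-vote a body (0) / clothing (1) label per point across views.

    view_labels: (V, N) ints, the 2D segmentation label at point n's projection in view v.
    visible: (V, N) bools, whether point n is visible in view v.
    Ties and never-visible points default to body (0).
    """
    cloth_votes = np.sum((view_labels == 1) & visible, axis=0)
    seen = np.sum(visible, axis=0)
    return (2 * cloth_votes > seen).astype(np.int64)


def split_layers(points: np.ndarray, labels: np.ndarray):
    """Split stage-1 points into (body, clothing) sets to seed the two layers."""
    return points[labels == 0], points[labels == 1]


# Usage: 3 views, 2 points; the first point is labeled clothing in 2 of 3 views.
view_labels = np.array([[1, 0], [1, 0], [0, 1]])
visible = np.ones((3, 2), dtype=bool)
labels = vote_layer_labels(view_labels, visible)
body_pts, cloth_pts = split_layers(np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]), labels)
```

Voting across views makes the label robust to errors in any single 2D mask, and restricting votes to visible views avoids counting occluded projections.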


Citation

@inproceedings{lin2024layga,
  title={LayGA: Layered Gaussian Avatars for Animatable Clothing Transfer},
  author={Lin, Siyou and Li, Zhe and Su, Zhaoqi and Zheng, Zerong and Zhang, Hongwen and Liu, Yebin},
  booktitle={SIGGRAPH Conference Papers},
  year={2024}
}